Load

This sync loads data from your data warehouse into any REST API-friendly data destination.

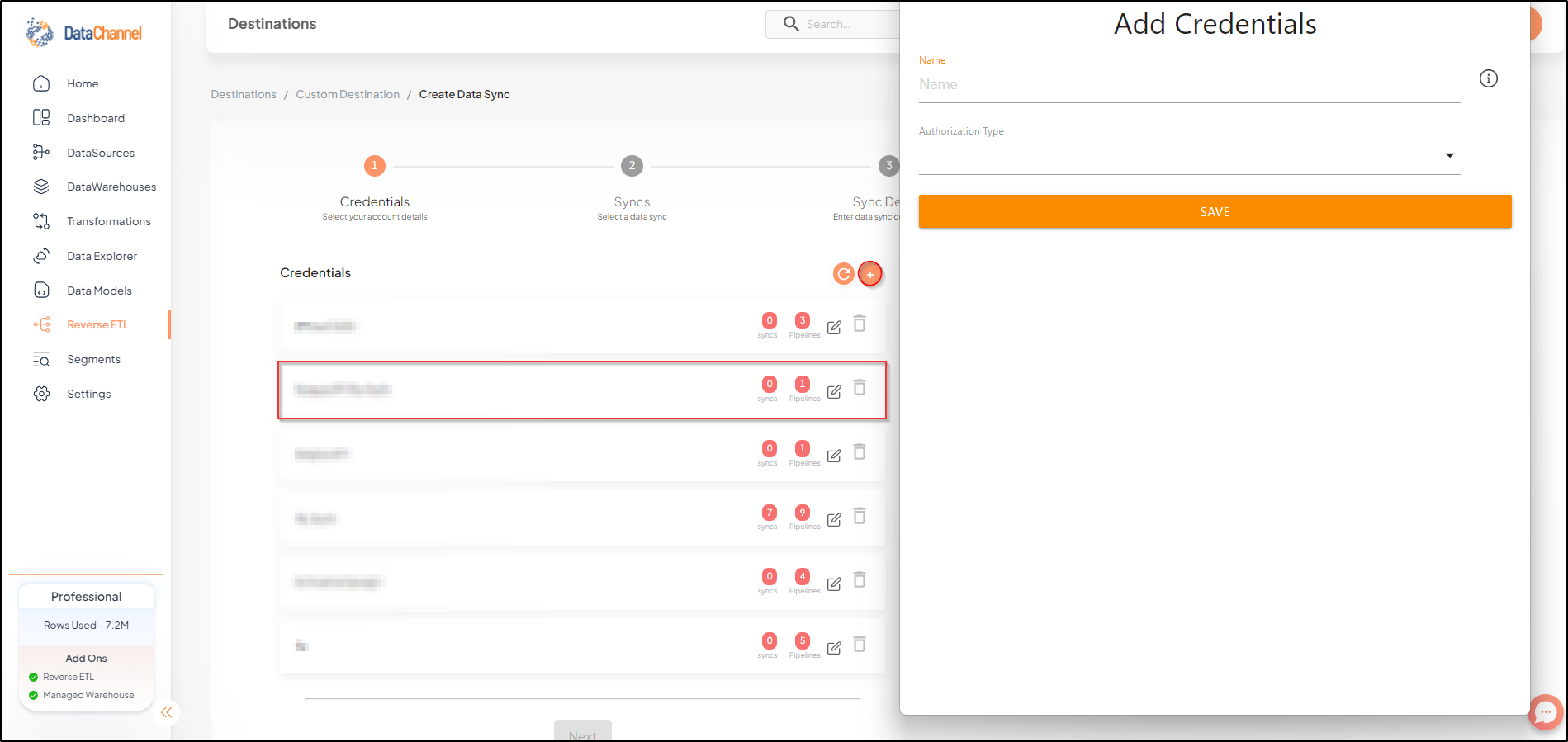

Configuring the Credentials

Select the account credentials that have access to the relevant REST API-friendly data destination from the given list, then click Next.

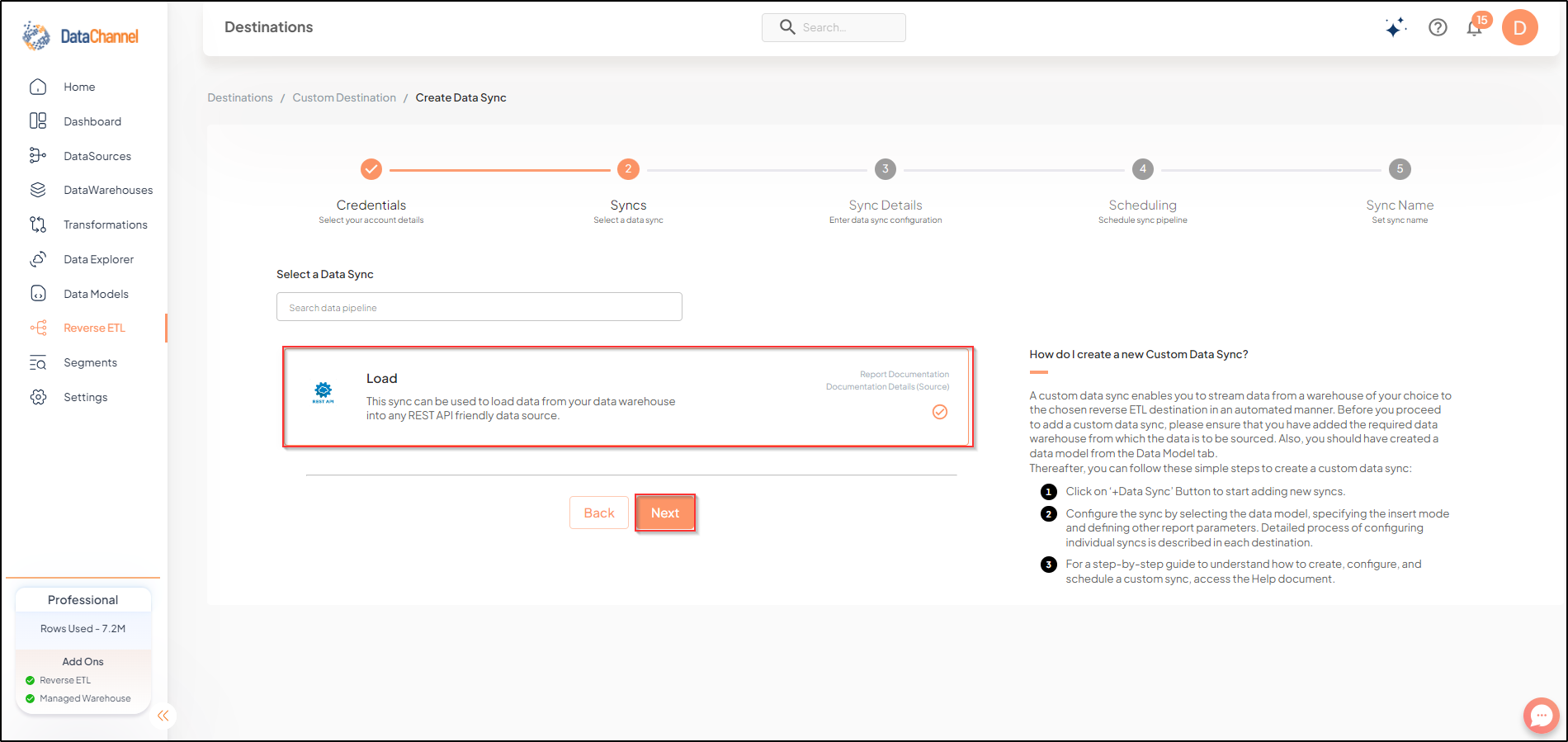

Data Sync Details

- Data Sync

  Select Accounts and click Next.

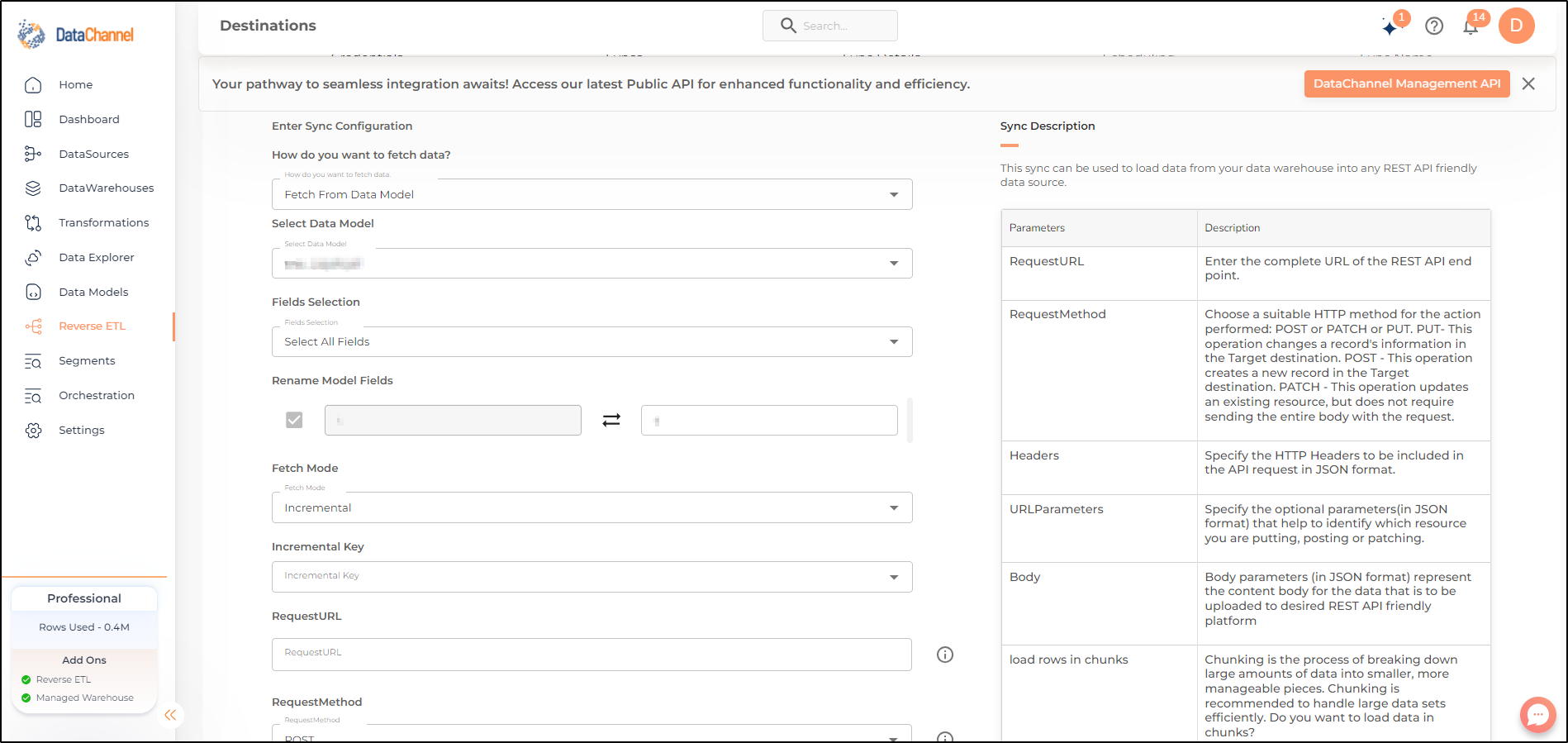

- How do you want to fetch data?

  Select whether you want to fetch data from a Data Model or from a Table/View.

- Data Model

  If you want to fetch data using a Data Model, select the data model that you would like to use for this sync. Check out how to configure a model here.

- Data Warehouse

  If you want to fetch data using a Table/View, select the data warehouse that you would like to use for this sync.

- Table/View

  Select the table or view in the data warehouse that you would like to use for this sync.

- Request URL

  Enter the complete URL of the REST API endpoint.

- Headers

  Specify the HTTP headers, in JSON format, to include in the API request.

- URL Parameters

  Specify the optional parameters (in JSON format) that help identify which resource you are putting, posting, or patching.

- Body

  Body parameters (in JSON format) represent the content of the data to be uploaded to the desired REST API-friendly platform.
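To make the relationship between the Request URL, Headers, URL Parameters, and Body fields concrete, here is a minimal Python sketch of how four such values could combine into one HTTP request. It is illustrative only: the endpoint, header values, parameter names, and the `build_request` helper are hypothetical, and treating URL parameters as a query string is an assumption, not a description of how the platform builds requests internally. The request is constructed but never sent.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical field values, written as the JSON text you might enter in the form.
request_url = "https://api.example.com/v1/contacts"
headers_json = '{"Content-Type": "application/json", "Authorization": "Bearer <token>"}'
url_parameters_json = '{"source": "warehouse"}'
body_json = '{"email": "jane@example.com", "plan": "pro"}'


def build_request(url, headers_text, params_text, body_text, method="POST"):
    """Combine the four form fields into one request object (nothing is sent)."""
    headers = json.loads(headers_text)                       # must be a JSON object
    params = json.loads(params_text) if params_text else {}  # optional field
    body = json.loads(body_text)
    # Assumption: URL parameters are appended to the URL as a query string.
    full_url = f"{url}?{urlencode(params)}" if params else url
    data = json.dumps(body).encode("utf-8")
    return Request(full_url, data=data, headers=headers, method=method)


req = build_request(request_url, headers_json, url_parameters_json, body_json)
print(req.get_method(), req.full_url)
```

Parsing each field with `json.loads` before saving a sync is also a quick way to catch malformed JSON, such as a trailing comma or single quotes.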

Setting Parameters

| Parameter | Description | Values |

|---|---|---|

Request method |

Required::

Choose a suitable HTTP method for the action performed: * * * |

|

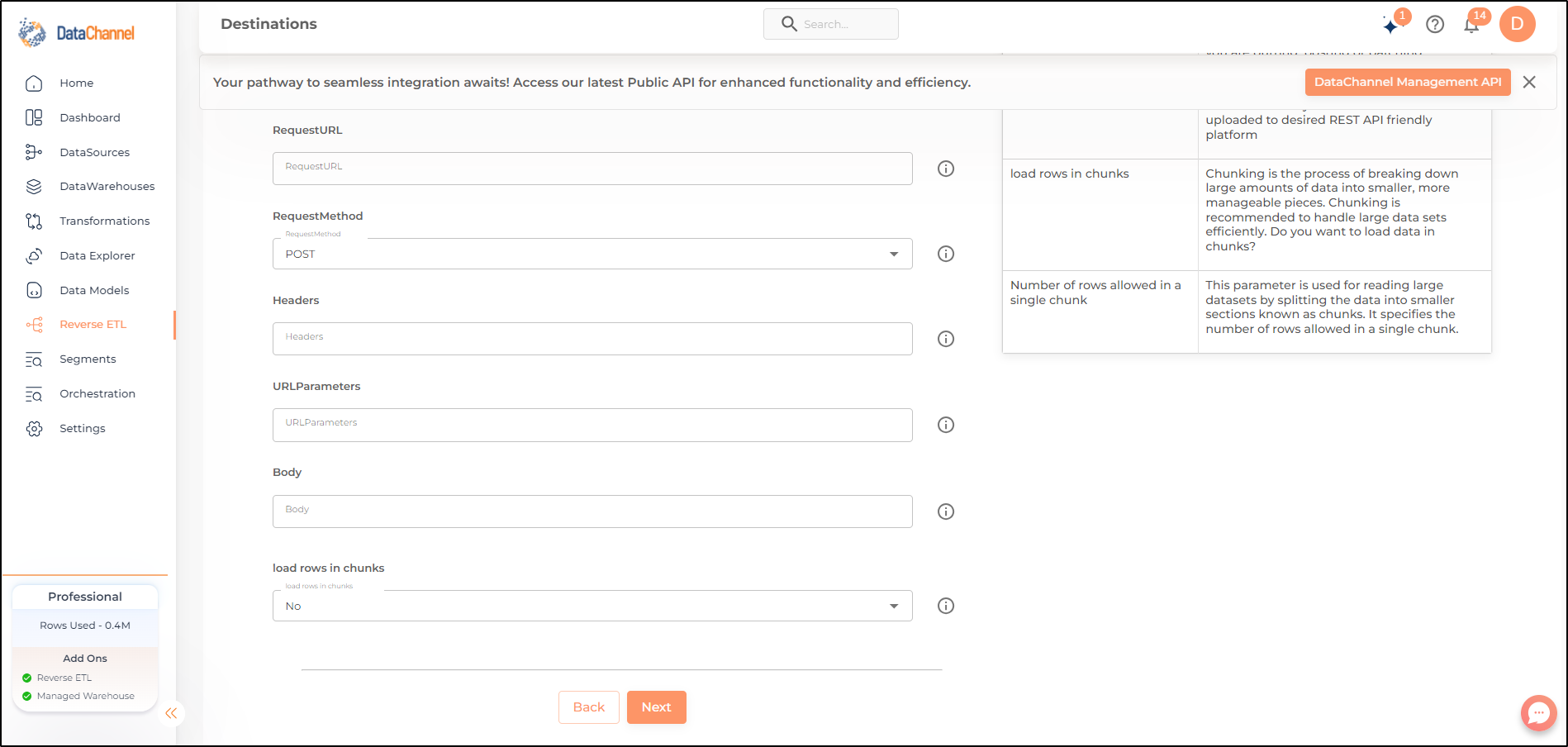

Load Rows in Chunks |

Required Chunking is the process of breaking down large amounts of data into smaller, more manageable pieces. Chunking is recommended to handle large data sets efficiently. Do you want to load data in chunks? |

{Yes, No} |

Number of rows allowed in a single chunk Dependant |

Required (If Chunking = Yes) This parameter is used for reading large datasets by splitting the data into smaller sections known as chunks. It specifies the number of rows allowed in a single chunk. |

Integer value |
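As a rough illustration of the chunking settings, the Python sketch below splits a list of rows into chunks of a fixed maximum size. The `chunk_rows` function and the sample rows are hypothetical and show only the general technique, not the platform's actual implementation.

```python
from typing import Iterator, List, Sequence


def chunk_rows(rows: Sequence[dict], rows_per_chunk: int) -> Iterator[List[dict]]:
    """Yield consecutive slices of `rows`, each holding at most `rows_per_chunk` rows."""
    if rows_per_chunk < 1:
        raise ValueError("rows_per_chunk must be a positive integer")
    for start in range(0, len(rows), rows_per_chunk):
        yield list(rows[start:start + rows_per_chunk])


# Seven hypothetical rows split into chunks of three.
rows = [{"id": i} for i in range(1, 8)]
chunks = list(chunk_rows(rows, 3))
print([len(chunk) for chunk in chunks])  # [3, 3, 1]
```

With seven rows and a chunk size of three, the last chunk holds the single remaining row, so no row is dropped or duplicated.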

Data Sync Scheduling

Set the schedule for the sync to run. A detailed explanation of sync scheduling can be found here.

Dataset & Name

Give your sync a name and an optional description, then click Finish to save it. Read more about naming and saving your syncs, including the option to save them as templates, here.

Still have Questions?

We’ll be happy to help you with any questions you might have! Send us an email at info@datachannel.co.
